Over three years ago now, I posted a three-part series on splitting strings:

- Split strings the right way – or the next best way

- Splitting Strings : A Follow-Up

- Splitting Strings : Now with less T-SQL

Then back in January, I took on a slightly more elaborate problem:

Throughout, my conclusion has been: STOP DOING THIS IN T-SQL. Use CLR or, better yet, pass structured parameters like DataTables from your application to table-valued parameters (TVPs) in your procedures, avoiding all the string construction and deconstruction altogether – which is really the part of the solution that causes performance problems.

And then SQL Server 2016 came along…

When RC0 was released, a new function was documented without a lot of fanfare: STRING_SPLIT. A quick example:

SELECT * FROM STRING_SPLIT('a,b,cd', ','); /* result: value -------- a b cd */

It caught the eyes of a few colleagues, including Dave Ballantyne, who wrote about the main features – but was kind enough to offer me first right of refusal on a performance comparison.

This is mostly an academic exercise, because with a hefty set of limitations in the first iteration of the feature, it is probably not going to be feasible for a large number of use cases. Here is the list of the observations that Dave and I have made, some of which may be deal-breakers in certain scenarios:

- the function requires the database to be in compatibility level 130;

- it only accepts single-character delimiters;

- there is no way to add output columns (like a column indicating ordinal position within the string);

- related, there is no way to control sorting – the only options are arbitrary and alphabetical

ORDER BY value;

- related, there is no way to control sorting – the only options are arbitrary and alphabetical

- so far, it always estimates 50 output rows;

- when using it for DML, in many cases you will get a table spool (for Hallowe'en protection);

NULLinput leads to an empty result;- there is no way to push down predicates, like eliminating duplicates or empty strings due to consecutive delimiters;

- there is no way to perform operations against the output values until after the fact (for example, many splitting functions perform

LTRIM/RTRIMor explicit conversions for you –STRING_SPLITspits back all the ugly, such as leading spaces).

So with those limitations out in the open, we can move on to some performance testing. Given Microsoft's track record with built-in functions that leverage CLR under the covers (cough FORMAT() cough), I was skeptical about whether this new function could come close to the fastest methods I'd tested to date.

Let's use string splitters to separate comma-separated strings of numbers, this way our new friend JSON can come along and play too. And we'll say that no list can exceed 8,000 characters, so no MAX types are required, and since they're numbers, we don't have to deal with anything exotic like Unicode.

First, let's create our functions, several of which I adapted from the first article above. I left out a couple that I didn't feel would compete; I'll leave it as an exercise to the reader to test those.

Numbers Table

This one again needs some setup, but it can be a pretty small table due to the artificial limitations we're placing:

SET NOCOUNT ON; DECLARE @UpperLimit INT = 8000; ;WITH n AS ( SELECT x = ROW_NUMBER() OVER (ORDER BY s1.[object_id]) FROM sys.all_objects AS s1 CROSS JOIN sys.all_objects AS s2 ) SELECT Number = x INTO dbo.Numbers FROM n WHERE x BETWEEN 1 AND @UpperLimit; GO CREATE UNIQUE CLUSTERED INDEX n ON dbo.Numbers(Number);

Then the function:

CREATE FUNCTION dbo.SplitStrings_Numbers ( @List varchar(8000), @Delimiter char(1) ) RETURNS TABLE WITH SCHEMABINDING AS RETURN ( SELECT [Value] = SUBSTRING(@List, [Number], CHARINDEX(@Delim, @List + @Delim, [Number]) - [Number]) FROM dbo.Numbers WHERE Number <= LEN(@List) AND SUBSTRING(@Delim + @List, [Number], 1) = @Delim );

JSON

Based on an approach first revealed by the storage engine team, I created a similar wrapper around OPENJSON, just note that the delimiter has to be a comma in this case, or you have to do some heavy duty string substitution before passing the value into the native function:

CREATE FUNCTION dbo.SplitStrings_JSON ( @List varchar(8000), @Delimiter char(1) -- ignored but made automated testing easier ) RETURNS TABLE WITH SCHEMABINDING AS RETURN (SELECT value FROM OPENJSON('[' + @List + ']'));

XML

CREATE FUNCTION dbo.SplitStrings_XML ( @List varchar(8000), @Delimiter char(1) ) RETURNS TABLE WITH SCHEMABINDING AS RETURN (SELECT [value] = y.i.value('(./text())[1]', 'varchar(8000)') FROM (SELECT x = CONVERT(XML, '<i>' + REPLACE(@List, @Delimiter, '</i><i>') + '</i>').query('.') ) AS a CROSS APPLY x.nodes('i') AS y(i));

CLR

I once again borrowed Adam Machanic's trusty splitting code from almost seven years ago, even though it supports Unicode, MAX types, and multi-character delimiters (and actually, because I don't want to mess with the function code at all, this limits our input strings to 4,000 characters instead of 8,000):

CREATE FUNCTION dbo.SplitStrings_CLR ( @List nvarchar(MAX), @Delimiter nvarchar(255) ) RETURNS TABLE ( value nvarchar(4000) ) EXTERNAL NAME CLRUtilities.UserDefinedFunctions.SplitString_Multi;

STRING_SPLIT

Just for consistency, I put a wrapper around STRING_SPLIT:

CREATE FUNCTION dbo.SplitStrings_Native ( @List varchar(8000), @Delimiter char(1) ) RETURNS TABLE WITH SCHEMABINDING AS RETURN (SELECT value FROM STRING_SPLIT(@List, @Delimiter));

Source Data & Sanity Check

I created this table to serve as the source of input strings to the functions:

CREATE TABLE dbo.SourceTable ( RowNum int IDENTITY(1,1) PRIMARY KEY, StringValue varchar(8000) ); ;WITH x AS ( SELECT TOP (60000) x = STUFF((SELECT TOP (ABS(o.[object_id] % 20)) ',' + CONVERT(varchar(12), c.[object_id]) FROM sys.all_columns AS c WHERE c.[object_id] < o.[object_id] ORDER BY NEWID() FOR XML PATH(''), TYPE).value(N'(./text())[1]', N'varchar(8000)'),1,1,'') FROM sys.all_objects AS o CROSS JOIN sys.all_objects AS o2 ORDER BY NEWID() ) INSERT dbo.SourceTable(StringValue) SELECT TOP (50000) x FROM x WHERE x IS NOT NULL;

Just for reference, let's validate that 50,000 rows made it into the table, and check the average length of the string and the average number of elements per string:

SELECT [Values] = COUNT(*), AvgStringLength = AVG(1.0*LEN(StringValue)), AvgElementCount = AVG(1.0*LEN(StringValue)-LEN(REPLACE(StringValue, ',',''))) FROM dbo.SourceTable; /* result: Values AvgStringLength AbgElementCount ------ --------------- --------------- 50000 108.476380 8.911840 */

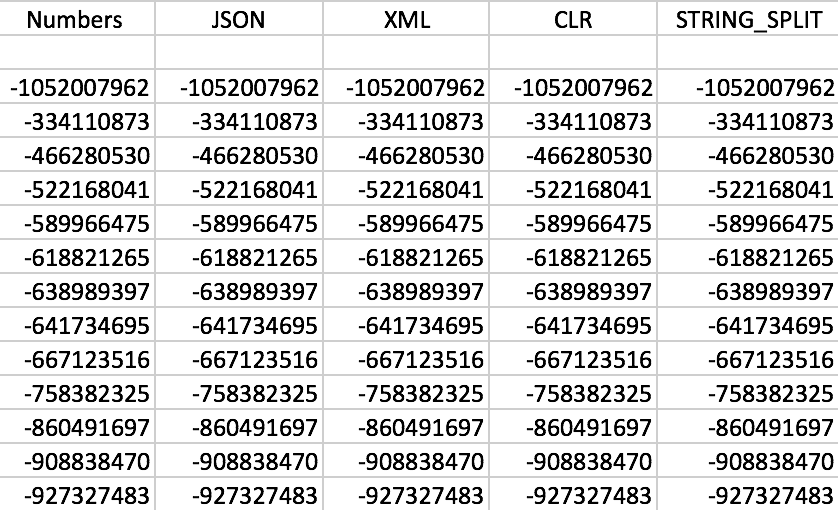

And finally, let's make sure each function returns the right data for any given RowNum, so we'll just pick one randomly and compare the values obtained through each method. Your results will vary of course.

SELECT f.value FROM dbo.SourceTable AS s CROSS APPLY dbo.SplitStrings_/* method */(s.StringValue, ',') AS f WHERE s.RowNum = 37219 ORDER BY f.value;

Sure enough, all the functions work as expected (sorting isn't numeric; remember, the functions output strings):

Sample set of output from each of the functions

Sample set of output from each of the functions

Performance Testing

SELECT SYSDATETIME(); GO DECLARE @x VARCHAR(8000) = ( SELECT f.value FROM dbo.SourceTable AS s CROSS APPLY dbo.SplitString_/* method */(s.StringValue,',') AS f ); GO 100 SELECT SYSDATETIME();

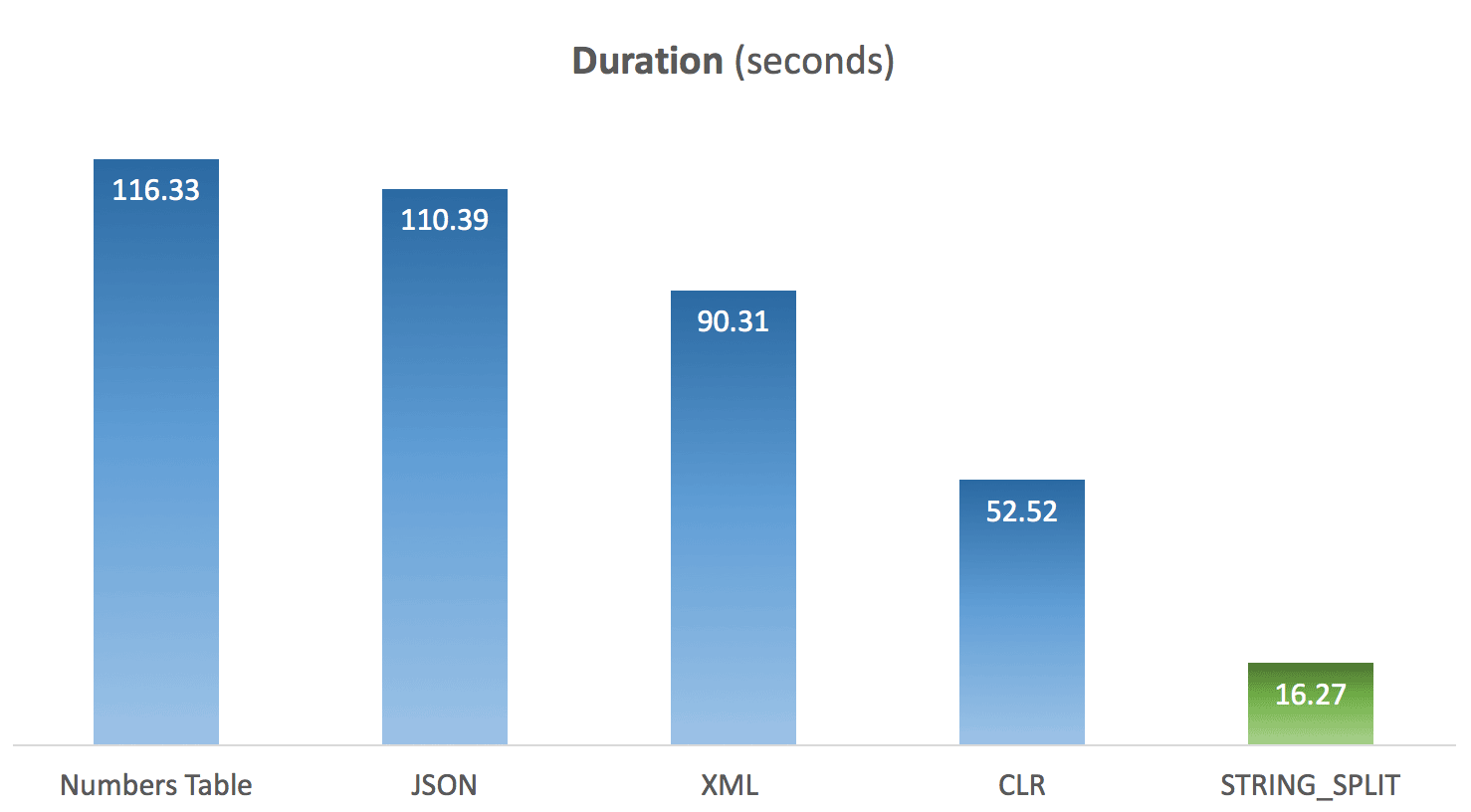

I ran the above code 10 times for each method, and averaged the timings for each. And this is where the surprise came in for me. Given the limitations in the native STRING_SPLIT function, my assumption was that it was thrown together quickly, and that performance would lend credence to that. Boy was the result different from what I expected:

Average duration of STRING_SPLIT compared to other methods

Average duration of STRING_SPLIT compared to other methods

Conclusion

While custom CLR still shows a huge advantage over traditional T-SQL approaches, and using JSON for this functionality appears to be nothing more than a novelty, STRING_SPLIT was the clear winner – by a mile. So, if you just need to split a string and can deal with all of its limitations, it looks like this is a much more viable option than I would have expected. Hopefully in future builds we'll see additional functionality, such as an output column indicating the ordinal position of each element, the ability to filter out duplicates and empty strings, and multi-character delimiters.

The post Performance Surprises and Assumptions : STRING_SPLIT appeared first on SQLPerformance.com.